But first, a bit of background, or lack thereof…

I like giving credit where credit is due. Ever since I started streaming my sets in 2014, I knew I wanted some way of showing what was playing. Once I moved to video, it was pretty easy to do, albeit a little fragile. I briefly discussed this in one of the Studio Setup posts, but let’s take another look at how I actually pulled it off.

This is a screenshot from one of my streams back in 2015, and I’m pretty sure it’s the only screenshot I have. I know I still have audio recordings of these, but no video. Take a look at the lower right portion of the screen. This little banner with the song title and artist is called a “Lower Third”. That’s a video industry term for something that, unironically, appears in the lower third of the video. Lower Thirds are usually used for titling someone or something, like I’m doing here.

The way I got this data back then was pretty interesting… You might notice that the font is exactly the same as Traktor’s font (at least back in the Traktor 2 days; the font is much nicer now).

Well, it’s the same because that is actually Traktor’s window. I used to screen capture the track info area for both decks in Traktor, cropped to just the title and artist, and then use my broadcast software to color key out the gray background, leaving only the text behind. Then some fancy macro work would slide the lower third graphic (the blue bar) in, wait a second or two, and fade in the text. It was very CPU/GPU intensive.

It was also super error prone… If I pushed the wrong button, I would end up with both graphics on screen at once, with two layers of text. I eventually made A>B and B>A buttons that did the work for me, but it was still very temperamental.

I also had absolutely no control over the font. Sure, I could change it in Traktor to something else, but that would change it for the entire interface, and that was a hard sell back in the days when my main monitor’s resolution was 1280×720, maybe 1920×1080.

Strap in – it gets technical past this point…

*MIDI has entered the chat*

That screen capture setup lasted me a while, but I really wanted control over the font. I started digging into ways to get this data out of Traktor directly. I’m pretty sure that at one point my disassembly skills came into play, and I started trying to find a way to do this from within Traktor itself, or at least poke at where it keeps that data in memory. I remember that not being fun, and I gave up. Another crazy idea was to read whatever temporary NML file the History playlist uses, since NML files are at least machine readable in some fashion (but Traktor doesn’t appear to use one).

I did consider using the streaming settings in Traktor, but that meant more software and overhead that I didn’t want to deal with, and the one time I tried it, I wasn’t exactly happy with the output.

I wanted something simple. Artist and Track in their own separate text files, for each deck, A and B.

I did not expect MIDI to be the answer. If you’re completely unfamiliar with what MIDI is, go read up on that briefly, and then come back here.

I stumbled across https://github.com/Sonnenstrahl/traktor-now-playing – or some variant of this. If you don’t want to read that entire README.md, here’s the gist of what’s going on.

- Turns out there’s some piece of Denon DJ gear (the Denon HC4500) that has LCD screens on it.

- This Denon HC4500 receives all the data to display on those screens via MIDI.

- Sonnenstrahl and some folks contributing to this thread on Native Instruments’ forums dissected the MIDI traffic to decode the font codes that Traktor sends to the Denon.

- Code was written (in NodeJS) that pretends to be the Denon: it accepts the MIDI data from Traktor, reads out what is being sent, and translates the LCD characters to normal ASCII text characters.

- It outputs exactly what I want, Artist and Track from each deck, but only to the Node console.
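If you’re wondering what “translate the LCD characters” can look like in code, here’s a minimal JavaScript sketch. It is not the original project’s code, and the message layout is an assumption: it treats each message as a standard three-byte MIDI message, assumes LCD codes 0x20 to 0x7E line up with ASCII, and uses a hypothetical `overrides` map for the accented and special glyphs that the real font mapping covers. Anything unknown falls back to a box character.

```javascript
// Sketch only: the real Traktor -> HC4500 message layout is NOT reproduced here.
// Assumptions (hypothetical): codes 0x20-0x7E match ASCII, and other glyphs
// come from an overrides map built from the LCD font mapping.

const BOX = "\u25A1"; // shown when a code has no known mapping

// Split a raw MIDI message into its standard parts.
// The high nibble of the status byte is the message type, the low nibble the channel.
function parseMidiMessage(bytes) {
  const [status, data1 = 0, data2 = 0] = bytes;
  return {
    type: status & 0xf0, // e.g. 0x90 = Note On, 0xB0 = Control Change
    channel: status & 0x0f, // 0-15 (MIDI channels 1-16)
    data1,
    data2,
  };
}

// Translate one LCD character code into a text character.
function decodeLcdChar(code, overrides = new Map()) {
  if (overrides.has(code)) return overrides.get(code);
  if (code >= 0x20 && code <= 0x7e) return String.fromCharCode(code);
  return BOX;
}

// Example: a hypothetical Control Change on MIDI channel 2 carrying the code for "A".
const msg = parseMidiMessage([0xb1, 0x10, 0x41]);
console.log(msg.type === 0xb0, msg.channel, decodeLcdChar(msg.data2));
```

Splitting the status byte into type and channel follows the MIDI spec; which message type and data byte actually carry each character is what the folks in that thread had to work out, so check the original code before relying on any of this.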

This blew my mind. I know a good bit of Node and I am great with MIDI, so all I had to do was make it do my bidding. A few minor changes, and we’re saving each of the strings to their respective files. I now have 4 text files that get updated automatically when the track is changed.
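Here’s a rough idea of what saving each string to its own file can look like. This is a hypothetical sketch, not the fork’s actual code: the `NowPlayingWriter` class and its deck/field keys are made up for illustration, and the only real API involved is Node’s built-in `fs.writeFileSync`. Skipping the write when the text hasn’t changed is a design choice here, to avoid rewriting a file when the same string arrives again.

```javascript
const fs = require("fs");

// Hypothetical helper: maps deck ("A"/"B") and field ("artist"/"title")
// to an output file and rewrites that file only when the text changes.
class NowPlayingWriter {
  constructor(paths) {
    this.paths = paths; // e.g. { A: { artist: "...", title: "..." }, B: { ... } }
    this.last = new Map(); // last text written, keyed by file path
  }

  // Returns true if the file was written, false if the text was unchanged.
  update(deck, field, text) {
    const deckPaths = this.paths[deck] || {};
    const target = deckPaths[field];
    if (!target) {
      throw new Error(`No output file configured for deck ${deck} ${field}`);
    }
    if (this.last.get(target) === text) return false; // nothing new to write
    fs.writeFileSync(target, text, "utf8");
    this.last.set(target, text);
    return true;
  }
}
```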

It can’t be that easy! What’s the catch?

Of course there’s a catch. There’s always a catch. In this case, it’s spelled out in the original README.md.

> Once you load a Track onto your Deck it will display…after 10-20secs (this is due to Denon sending the Chars one by one)

Yeah, it’s a bit slow, and the longer the track or artist string is, the longer it’s going to take to render your text file.
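It helps to picture the LCD line being filled in one character at a time. This hypothetical sketch models one line as a fixed-width buffer; the `(position, character)` shape of each update and the width are assumptions for illustration, not documented HC4500 behavior.

```javascript
// Hypothetical model of one LCD text line that is filled in
// character by character as (position, character) updates arrive.
class LcdLine {
  constructor(width) {
    this.chars = new Array(width).fill(" ");
  }

  // Place one character; updates outside the line are ignored.
  setChar(position, ch) {
    if (Number.isInteger(position) && position >= 0 && position < this.chars.length) {
      this.chars[position] = ch;
    }
  }

  // Current contents with the trailing padding removed.
  text() {
    return this.chars.join("").trimEnd();
  }
}

// Each character is a separate update, so longer strings take longer to complete.
const line = new LcdLine(16);
[..."Deck A"].forEach((ch, i) => line.setChar(i, ch));
console.log(JSON.stringify(line.text()));
```

A longer string simply needs more of those updates before the line is complete, and nothing in the buffer itself says when the last one has arrived.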

There’s no real way to get around this. I have come up with a way to know that it’s done writing the file – but I’m going to save that knowledge drop for a production post later on when I discuss how I put these text files to use and how my live graphics compositing system works.

One smaller caveat: if a character in the Track or Artist data has no match in the LCD character set, it is sometimes replaced with a box character. This happens infrequently, and I try to pre-sanitize songs if I think it will be an issue. If you look at the font mapping pictures on GitHub, you’ll see that the LCD character set can handle most accented characters, umlauts, circumflex characters, and a pretty wide range of other symbols. It’s honestly not too shabby for an LCD screen on a DJ controller from circa 2009!

That being said, if you play a lot of songs where artists have stylized their titles or artist names heavily with other Unicode characters, you might run into the unknown character issue and just get a box character as a replacement. Just something to watch out for if you’re a stickler for perfection…
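If you do want to pre-sanitize, part of the check can be automated. This sketch is not part of the fork, and the “safe” range it uses (printable ASCII plus Latin-1) is an assumption, so compare it against the font mapping pictures before trusting it. It runs Unicode NFKC normalization via `String.prototype.normalize`, which folds many stylized letters (like mathematical bold) back to plain ones while keeping precomposed accents such as é, and then lists any characters still outside the safe range.

```javascript
// Hypothetical "safe" set: printable ASCII plus the Latin-1 Supplement.
// The real HC4500 character set differs; check the font mapping images.
const SAFE_CHAR = /^[\u0020-\u007E\u00A0-\u00FF]$/;

// Normalize a title/artist string and list characters likely to show up as a box.
function checkLcdText(input) {
  // NFKC folds compatibility characters (e.g. U+1D407 bold "H") to plain letters
  // and composes "e" + combining acute into a single "é".
  const text = input.normalize("NFKC");
  const unsupported = [];
  for (const ch of text) {
    // for...of walks code points, so characters outside the BMP are not split
    if (!SAFE_CHAR.test(ch) && !unsupported.includes(ch)) unsupported.push(ch);
  }
  return { text, unsupported };
}

console.log(checkLcdText("\u{1D407}\u{1D41E}\u{1D425}\u{1D425}\u{1D428}"));
console.log(checkLcdText("Star \u2605"));
```

Anything left in `unsupported` is a candidate for editing the tag by hand before the set.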

With all that out of the way, I’d like to wrap up by sharing my fork of the original code, which outputs to text files of your choosing.

You can find my version at https://github.com/LKHetzel/traktor-now-playing

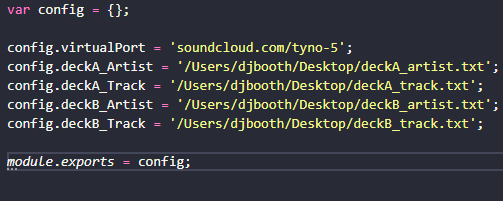

You can find out how to make it work under “This Fork Does”, but as long as you understand how to run a simple Node app, just edit the 4 file variables in the config.js file and you’re good to go. You do need to point them at existing files, though. For example, I created 4 blank text files on my laptop’s Desktop, saved them with specific names for each deck’s artist and title, and updated config.js like so:
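The fork’s actual config.js isn’t reproduced here, so treat this purely as an illustration of the idea, with hypothetical variable and file names. It builds four Desktop paths using Node’s `path` and `os` modules, and adds a small check that lists any configured file that doesn’t exist yet, since the outputs need to point at existing files.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Hypothetical names: use whatever variables the fork's config.js actually defines.
const desktop = path.join(os.homedir(), "Desktop");

const outputFiles = {
  deckAArtist: path.join(desktop, "DeckA_Artist.txt"),
  deckATitle: path.join(desktop, "DeckA_Title.txt"),
  deckBArtist: path.join(desktop, "DeckB_Artist.txt"),
  deckBTitle: path.join(desktop, "DeckB_Title.txt"),
};

// Return the configured paths that do not exist yet, so they can be created
// (as blank text files) before starting the app.
function missingOutputFiles(files) {
  return Object.values(files).filter((file) => !fs.existsSync(file));
}

const missing = missingOutputFiles(outputFiles);
if (missing.length > 0) {
  console.warn("Create these blank files first:\n" + missing.join("\n"));
}
```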

That’s it. Running the Node app as shown in the README.md updates these files as soon as the track is fully loaded in Traktor. The “fully loaded” part matters: if you’re analyzing tracks on import/first load, or using the Beatport or Beatsource integrations, it will not start writing the text until the song is done downloading and is playable. There’s nothing that can be done about this – Traktor will not start sending the data over MIDI until then.

So, what next?

I don’t really plan on making too many more updates to this code. It fits my workflow perfectly, and should be usable by anyone who understands how to put text file information on screen in their broadcast software. I don’t have a Denon HC4500, I don’t want to track one down to see what else that LCD screen could possibly display, and I don’t particularly care what else it could do.

If I have time, maybe I’ll update it to run on a more recent version of Node. Or if you’re a fancy pants NodeJS person, you could clone the code and open a PR against it. I’m happy to pull your updates, test them out, and merge them if they make the cut.

I don’t really intend to provide much support for this, but if you run into issues, you can pop into the #production-stuff channel on the Digital Identity Discord, and if I’ve got time, I’ll try to help you debug it.

I think my time is better spent begging Native Instruments to just make track data available this way, or at least give us some sort of API to write it ourselves.

My next post in the Tech Tales section will cover doing things with the Mixcloud API. I think that code is also available on my GitHub, but I haven’t polished it enough yet to write about it.